
Cloud Run service on Google Cloud Platform

This article is about the Cloud Run service on Google Cloud Platform. It will be of most interest to you if you’re looking into services to run your application on - Cloud Run might suit you well. Whether you’re a beginner hobby developer or a full-blown development team lead, you might find this interesting.

Read some use-cases for Cloud Run, tips and tricks, pitfalls to avoid, and comparisons to other services.

Google’s description of what you can do with Cloud Run is:

Develop and deploy highly scalable containerized applications on a fully managed serverless platform.

This service lets you simply deploy OCI containers. The resulting container services scale up and down on demand, are monitored, and have their output logged, all automatically. The only thing the user needs to care about is the container.

What Cloud Run is ideal for

Cloud Run is absolutely perfect for the type of applications that tick all the boxes below:

- Self-contained

- Stateless

- Containerised/containerizable

- Low start time

- Not used 24h per day - e.g. used only internally by a company in a limited range of time zones

- Where the absolute lowest possible latency is not needed

Generally, such applications would be:

- REST API backends

- Internal tools/websites

- Quick demos

And if the extra boxes below are also ticked, it’s just that much better:

- Written in the Go language (see Go containers from SCRATCH)

- Profiled performance

What Cloud Run is not for

Aside from the exact opposites of the things in the “What Cloud Run is ideal for” section, there are both general and specific use-cases that are just not for Cloud Run at all.

- SQL databases - just... don’t. They are not stateless, they generally suffer from not being on the same instance as their filesystem, and they cannot scale by simply adding more identical instances.

- Applications with cache warmups - unless it’s asynchronous, but still, not recommended

- Applications requiring multiple processes - it is possible, but really not recommended
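To make the “unless it’s asynchronous” caveat a bit more concrete, here is a minimal Go sketch of a cache that is warmed in the background while the process is already able to answer. The `lazyCache` type, its methods, and the loader function are hypothetical names made up for this illustration. A real service would start its HTTP server where the comment indicates, and would need a slow-path fallback for requests that arrive before the warmup finishes.

```go
package main

import (
	"fmt"
	"sync"
)

// lazyCache is a hypothetical in-memory cache that can be filled in the
// background while the application is already serving requests.
type lazyCache struct {
	mu    sync.RWMutex
	items map[string]string
}

func newLazyCache() *lazyCache {
	return &lazyCache{items: map[string]string{}}
}

// warm runs the (potentially slow) loader and swaps its result in.
func (c *lazyCache) warm(load func() map[string]string) {
	loaded := load() // the slow part happens outside the lock
	c.mu.Lock()
	defer c.mu.Unlock()
	c.items = loaded
}

// get returns the cached value and whether it was present.
func (c *lazyCache) get(key string) (string, bool) {
	c.mu.RLock()
	defer c.mu.RUnlock()
	v, ok := c.items[key]
	return v, ok
}

func main() {
	cache := newLazyCache()
	done := make(chan struct{})

	// Warm the cache asynchronously instead of blocking startup on it.
	go func() {
		cache.warm(func() map[string]string {
			return map[string]string{"greeting": "hello"}
		})
		close(done)
	}()

	// A real service would start its HTTP server here. Requests arriving
	// before the warmup completes have to cope with a cache miss.
	if _, ok := cache.get("greeting"); !ok {
		fmt.Println("cache still cold, falling back to the slow path")
	}

	<-done
	v, _ := cache.get("greeting")
	fmt.Println("after warmup:", v)
}
```

Even with this pattern, every new instance that Cloud Run scales up starts with a cold cache, which is one reason the approach is still not recommended.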

Cloud Run vs. other GCP services

Cloud Run vs. App Engine

App Engine is a service that is way older than Cloud Run (2008 vs. 2019), and it also was, and, well, still is, a slightly different type of service, though they both are PaaS. App Engine was also the first ever Cloud service by Google - it’s even older than Google Drive.

There are two types of App Engine: Standard and Flexible.

Cloud Run vs. App Engine Uptime SLA: 99.95% vs 99.95%

AE Standard Environment

The App Engine Standard Environment is a runtime-constricted platform. Originally, in the First Gen, one could only deploy Python applications, but this was expanded with choices of Go, Java, JavaScript (Node.js), PHP, and Ruby in the Second Gen.

A file called app.yaml is the sole addition that needs to be made to a codebase in one of those languages. The file defines the runtime choice, environment variables, scaling, instance type (RAM, CPU), path handlers (some HTTP paths can be served from a static directory), and the application entrypoint - by default, the entrypoint is main:app for Python 3.

AE Flexible Environment

The App Engine Flexible Environment is way closer functionally to Cloud Run than Standard.

While the Flexible Environment has a set of predefined runtimes just like Standard, plus .NET, it also has the option to supply a custom runtime via a Dockerfile.

There is, however, still a slight difference between this and Cloud Run: you have to supply the app separately from the runtime. The major advantage of this is that you can have a stable runtime container, one that perhaps won’t change that often, kept completely separate from the application’s code, so application developers cannot change the Containerfile even if they wanted to.

Cloud Run vs. Container VMs + Autoscaling MIGs and Load Balancers

Container VMs, or more specifically Compute Engine VMs running the Container-Optimized OS from Google, allow for configurations nearly identical to Cloud Run, with the underlying infrastructure being slightly more in the customer’s hands, but with more customization options and a different price.

Now, what are MIGs? Obviously not the Russian MiG airplanes. MIG stands for Managed Instance Group. Very simply, they’re groups of VMs that can be connected to Load Balancers. Couple that with an autoscaler, which can change the number of instances based on metrics directly from the instances, such as overall CPU utilization, and you’ve reached the same kind of autoscaling that Cloud Run offers.

VMs do in general have a minimal cost as long as they’re running. In a typical scenario, in a managed, autoscaling instance group, you would have at least one VM running all the time to serve the requests (as opposed to scaling them from zero on demand, which in the case of VMs would take seconds, if not minutes, to serve the first request). If your application serves people from around the world with multi-millions of requests per day, it might be worth it to use VMs. Use the Google Cloud Platform Pricing Calculator to find out.

On GCP specifically, using VMs has one advantage in the form of them being in a VPC. A VPC is pretty much a standard network with subnets, and is meant to be used for the internal communication of Compute services within GCP. Since the VMs are in the VPC network directly, they do not need a Serverless VPC Connector to connect to other internal resources. Such an internal resource might be a Memorystore (Redis) instance running for your caching needs, which is only accessible through an internal IP that is in the VPC as well. The Serverless VPC Connector does not cost much per month, but that is still more than paying nothing for it, which is the case when your VMs are already in the VPC themselves.

There are several details that you will have to manage when using this setup as opposed to just using Cloud Run:

- Autoscaling settings - when new instances are to be created, based on what metrics (CPU %, Load Balancer RQ/S, etc.)

There are some more things you will have to manage, but only if you’re not going to be using the Container-Optimized OS by Google or other OSs provided by default. Together they make up the “Guest environment”:

- Logging & Monitoring - you might have to install the agents that send that data to internal endpoints on GCP

- Low-level system settings - DHCP, rsyslog, and udev being the most critical things that you really want working well in any environment, really, especially in a virtual one

- OS Login - to use GCP’s web SSH, and to manage accesses to it via your project’s or organization’s IAM settings

Tips and Tricks

Automating deployments

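There are two things that need to be done in order to even have a deployment in the first place, both of which can subsequently be very easily automated.

First, there’s the infrastructure, beginning with the GCP project to contain all the resources, and then the Cloud Run service itself and anything it depends on. Second, there’s the container image that the service runs, covered in the container sections further down.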

Infrastructure

The most common tool to maintain cloud infrastructures currently is Terraform.

Terraform is an IaC (Infrastructure as Code) tool which reads resource definitions, written in HCL, and deploys them on the cloud platform of your choice.

There isn’t a correct way to write Terraform code, but there are best practices.

In the case of Cloud Run, or most GCP services, you could either use...

- Pure Terraform resources from the google provider, specifically google_cloud_run_service

  - The disadvantage of using pure resources is that there tend to be extra resources that you might not even think about if you’ve never deployed the services through IaC/APIs. Extra data & resources required: google_iam_policy, google_cloud_run_service_iam_policy.

  - The advantage of using these is that you can use exactly what you want and need, and nothing more. This might not necessarily be a point only for using pure resources, as some modules can be so well written that they share this.

- Modules - there isn’t one by Google for Cloud Run, but there is an excellent module that creates GCP projects, including some things that can be considered a part of the project that otherwise are separate resources, such as API enablements.

  - Modules aren’t limited to the ones hosted on platforms such as GitHub. They can also be local modules that simply live in a directory separate from your main code.

  - Modules can be used multiple times, and/or can be coded so that, based on their input variables, one or more of a specific kind of resource are created, so as to avoid invoking the module multiple times. The latter case is beneficial when a module has some kind of a unique, shared resource, such as an IAM policy which can be applied to multiple repeating resources.

The example below contains everything required for a basic, public Cloud Run service - the service itself, an IAM policy data source, and the IAM policy resource that attaches it - with input variables so that the code can be used as a module.

```hcl
variable "project" {
  type        = string
  description = "The GCP project's ID to deploy resources to"
}

variable "region" {
  type        = string
  description = "The GCP region to deploy resources to"
}

variable "service_name" {
  type        = string
  description = "The name of the Cloud Run service"
}

variable "container_url" {
  type        = string
  description = "The URL of a container to deploy the Cloud Run service with. E.g.: gcr.io/cloudrun/hello"
}

variable "maximum_scaling" {
  type        = number
  description = "The maximum number of Cloud Run instances to scale up to"
}

variable "minimum_scaling" {
  type        = number
  description = "The minimum number of Cloud Run instances running at all times"
}

variable "publicly_accessible" {
  type        = bool
  description = "Allow unauthenticated connections (if false, only users in the project's IAM with the proper permissions can connect to the service, via IAP)"
  default     = true
}

variable "service_account_name" {
  type        = string
  description = "The name (email) of the GCP Service Account that the service should run as"
  default     = null
}

variable "container_command" {
  type        = list(string)
  description = "Command to execute in container (overrides container's ENTRYPOINT)"
  default     = []
}

variable "container_args" {
  type        = list(string)
  description = "Arguments to command"
  default     = []
}

variable "timeout_seconds" {
  type        = number
  description = "Maximum amount of time the container can spend responding to a request"
  default     = 60
}

variable "port" {
  type        = number
  description = "The port number to send requests to the container on"
}

variable "env" {
  type = list(object({
    key   = string
    value = string
  }))
  description = "Environment variable key value pairs"
  default     = []
}

variable "vpc_connector_self_link" {
  type        = string
  description = "self_link value of the google_vpc_access_connector for allowing Cloud Run to communicate internally in VPC"
  default     = null
}

// Cloud Run
resource "google_cloud_run_service" "main" {
  project  = var.project
  name     = var.service_name
  location = var.region

  template {
    spec {
      containers {
        image   = var.container_url
        command = var.container_command
        args    = var.container_args

        ports {
          container_port = var.port
        }

        dynamic "env" {
          for_each = var.env
          content {
            name  = env.value["key"]
            value = env.value["value"]
          }
        }
      }

      service_account_name = var.service_account_name
      timeout_seconds      = var.timeout_seconds
    }

    metadata {
      annotations = {
        "autoscaling.knative.dev/maxScale"        = var.maximum_scaling
        "autoscaling.knative.dev/minScale"        = var.minimum_scaling
        "run.googleapis.com/vpc-access-connector" = var.vpc_connector_self_link
        "run.googleapis.com/vpc-access-egress"    = var.vpc_connector_self_link != null ? "private-ranges-only" : null
      }
    }
  }

  traffic {
    percent         = 100
    latest_revision = true
  }

  autogenerate_revision_name = true
}

// Resources to attach the invoke permission for allUsers (public) to Cloud Run
data "google_iam_policy" "cloud_run_public" {
  binding {
    role = "roles/run.invoker"
    members = [
      "allUsers",
    ]
  }
}

resource "google_cloud_run_service_iam_policy" "noauth" {
  count = var.publicly_accessible == true ? 1 : 0

  location    = google_cloud_run_service.main.location
  project     = google_cloud_run_service.main.project
  service     = google_cloud_run_service.main.name
  policy_data = data.google_iam_policy.cloud_run_public.policy_data
}
```

To deploy the infrastructure above, you’ll need:

- A Service Account key in the form of a .json file

  - The SA has to have an IAM binding (permissions) on the project that you’re going to be deploying to. For simplicity, give it Editor, but note that it’s very permissive and that you’ll need to safeguard the credentials to that account.

- Terraform itself

- An environment variable set to the location of your credentials .json file (on bash and similar shells: export GOOGLE_CREDENTIALS="/home/user/src/app/credentials.json")

Finally, in the directory with the Terraform code, you can run terraform init once, followed by terraform plan -out planfile and terraform apply planfile.

Building containers standalone

If you do not have a preferred pre-existing way of automatically building your container images, consider using GCP’s native tools to do that.

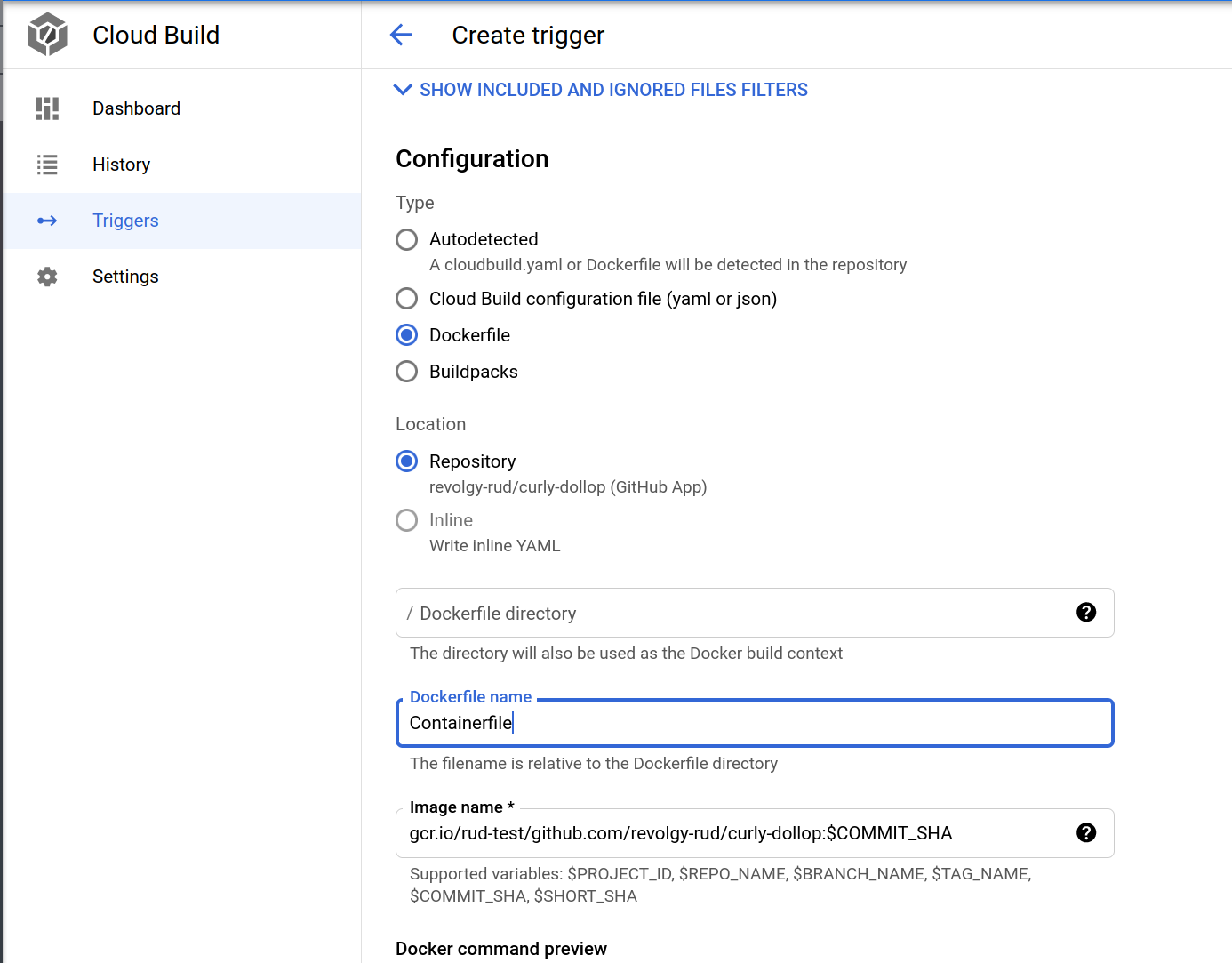

Google’s Cloud Build service can automatically create images based on your Containerfile / Dockerfile, and upload the resulting container directly into Google Container Registry (GCR, gcr.io).

To do this, you need to either...

- Have a repository hosted directly on Google Cloud Source Repositories

  - You can set up a mirror from almost any major hosting platform into your CSR repo

- Have a GitHub repository connected to your GCP project

And then you can create a Cloud Build trigger.

Building & deploying containers

This part is sort of optional, and it’s mutually exclusive with the “Building containers standalone” section above. You can deploy the container images to Cloud Run by supplying a new link to the container to Terraform when deploying through that.

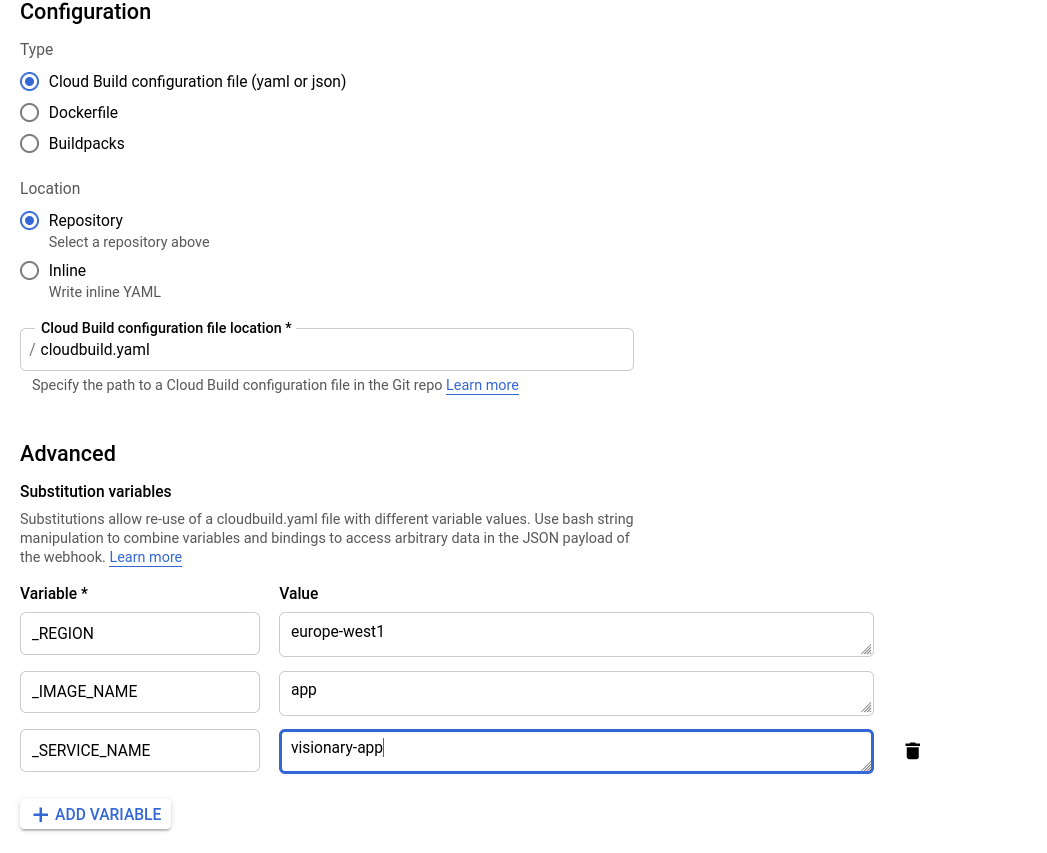

If you don’t want to do container deployments via Terraform, you can also use Cloud Build, though this time it’s a bit more involved.

Your Git project has to contain a file called cloudbuild.yaml, which should look roughly like this:

```yaml
---
steps:
  - name: 'gcr.io/cloud-builders/docker'
    args: ['build', '-t', "gcr.io/${PROJECT_ID}/${_IMAGE_NAME}", '.']
  - name: 'gcr.io/cloud-builders/docker'
    args: ['push', "gcr.io/${PROJECT_ID}/${_IMAGE_NAME}"]
  - name: 'gcr.io/google.com/cloudsdktool/cloud-sdk'
    entrypoint: gcloud
    args: ['run', 'deploy', "${_SERVICE_NAME}", '--image', "gcr.io/${PROJECT_ID}/${_IMAGE_NAME}", '--region', "${_REGION}"]
images:
  - gcr.io/$PROJECT_ID/$_IMAGE_NAME
```

This will:

- Build the container

- Push it to GCR

- Deploy a new revision

If you look at each of those steps, you’ll find out that they’re just normal CLI commands, such as docker build -t gcr.io/example/app ., gcloud run deploy visionary-app --image gcr.io/example/app, etc. You can run those on your own machine, as long as you’re sufficiently authenticated.

Some variables, such as $PROJECT_ID, are in each build by default.

Other variables, specifically those that start with a _, are user-supplied (note: user-supplied vars have to start like that).

When creating a trigger in Cloud Build, you’ll need to supply the values.

Go containers from SCRATCH

Skip this part if you don’t use the Go language.

Utilise the power of the Go toolchain, which can build apps as statically linked binaries (with Cgo disabled, as in the file below). Such binaries run without the presence of libraries - or really anything other than the Linux kernel itself - meaning the container is tiny.

In the file below, you can see that Git is installed and that go.mod and go.sum are added, but in the resulting container - after the FROM scratch line - there’ll only be SSL certificates and the single binary that is the app itself.

```dockerfile
# This Containerfile will result in a minimal container in the end.
FROM docker.io/golang:1.15-alpine AS builder
WORKDIR /go/src/app
RUN apk add --no-cache git

# Add the code files
COPY ./main.go ./go.mod ./go.sum ./
RUN go get -d ./...

# Build the Go app with Cgo disabled (Cgo is what allows Go to call C code),
# so that the resulting binary is statically linked.
RUN env CGO_ENABLED=0 GOOS=linux go build -o app .
CMD ["app"]

# Start a new, completely empty container creation
FROM scratch

# Copy the SSL certificates over, otherwise things like HTTPS won't work.
COPY --from=builder /etc/ssl /etc/ssl

# Copy over the app, which is statically linked because Cgo was disabled.
COPY --from=builder /go/src/app/app /app
CMD ["/app"]
```

Pitfalls

When using Terraform

When you’re planning a Terraform deployment, make sure to include the whole URL of the container in GCR in the container_url variable, so that it looks like this: gcr.io/example-project/app@sha256:ef1b210d2c040ef8135177ba0113220b0bfa1962ca894846b2a960ecf3de1b9c

and not like this:

gcr.io/example-project/app

Otherwise a new revision won’t be created, and you might just find out that your new app changes have not made an appearance.
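One way to catch this early is a small check in your deployment tooling that refuses image references that are not pinned by digest. The Go sketch below is only an illustration of that idea: the function name `isPinnedByDigest` and the regular expression are assumptions made for this example, not part of Terraform or any GCP tool.

```go
package main

import (
	"fmt"
	"regexp"
)

// digestRef matches image references that end in a sha256 digest,
// i.e. "@sha256:" followed by exactly 64 lowercase hex characters.
var digestRef = regexp.MustCompile(`@sha256:[0-9a-f]{64}$`)

// isPinnedByDigest reports whether ref points at one immutable image
// rather than at a name or tag that can move over time.
func isPinnedByDigest(ref string) bool {
	return digestRef.MatchString(ref)
}

func main() {
	refs := []string{
		"gcr.io/example-project/app@sha256:ef1b210d2c040ef8135177ba0113220b0bfa1962ca894846b2a960ecf3de1b9c",
		"gcr.io/example-project/app",
	}
	for _, ref := range refs {
		fmt.Printf("pinned=%t %s\n", isPinnedByDigest(ref), ref)
	}
}
```

Because the digest changes with every new image, the value of container_url changes too, and that changed value is what makes Terraform plan a new revision.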

Port numbers

Cloud Run always sends requests to the container on exactly one port. That port number has to be set on the infrastructure, and the app has to listen on it.

The app should listen on whatever number is defined in the PORT environment variable, i.e. it should not listen on a fixed port. You can of course fall back to a fixed port number if PORT is not defined.
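As a minimal sketch of that behaviour in Go, the server below reads PORT and falls back to a fixed port only when the variable is empty. The helper name `listenAddr` and the fallback value 8080 are choices made for this example. Pick whatever fallback suits your local development.

```go
package main

import (
	"fmt"
	"log"
	"net/http"
	"os"
)

// listenAddr turns the value of the PORT environment variable into a
// listen address. The 8080 fallback is an assumption for local runs,
// where PORT is usually not set.
func listenAddr(port string) string {
	if port == "" {
		port = "8080"
	}
	return ":" + port
}

func main() {
	http.HandleFunc("/", func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprintln(w, "Hello from Cloud Run")
	})

	addr := listenAddr(os.Getenv("PORT"))
	log.Printf("listening on %s", addr)

	// ListenAndServe blocks until the server fails or the process exits.
	log.Fatal(http.ListenAndServe(addr, nil))
}
```

Locally you could try it with something like PORT=9090 go run main.go and then request http://localhost:9090/.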

Summary

So far you’ve seen:

- What Cloud Run is for, and what it’s not for

- Cloud Run vs. App Engine

- How to deploy via Terraform

- How to deploy via Cloud Build

- How to have a minimal container when deploying apps written in Go

- ... and some tips and tricks and lore

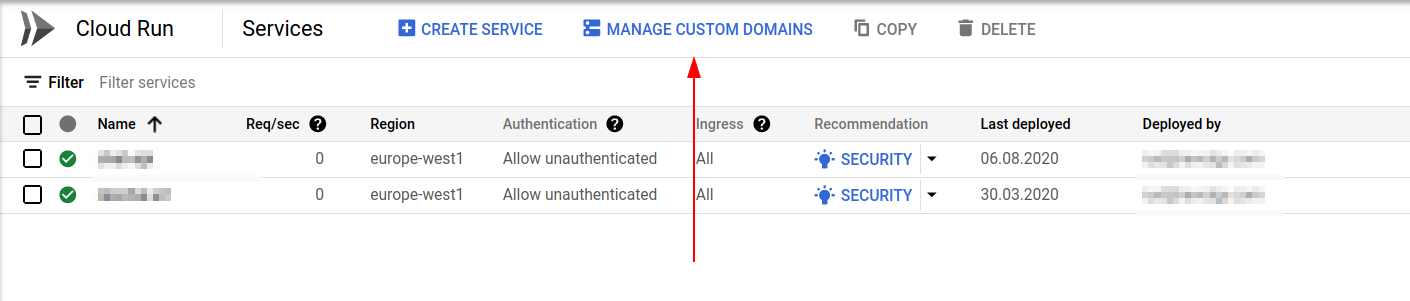

In the end, you should have a Cloud Run service. In the resource page, you can see an automatically generated link to your service - it ends with .run.app.


There you can see all sorts of metrics, check the logs, and so on.

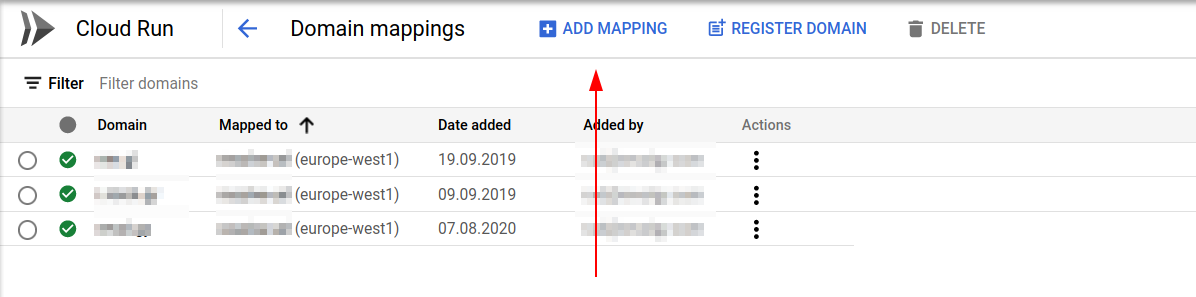

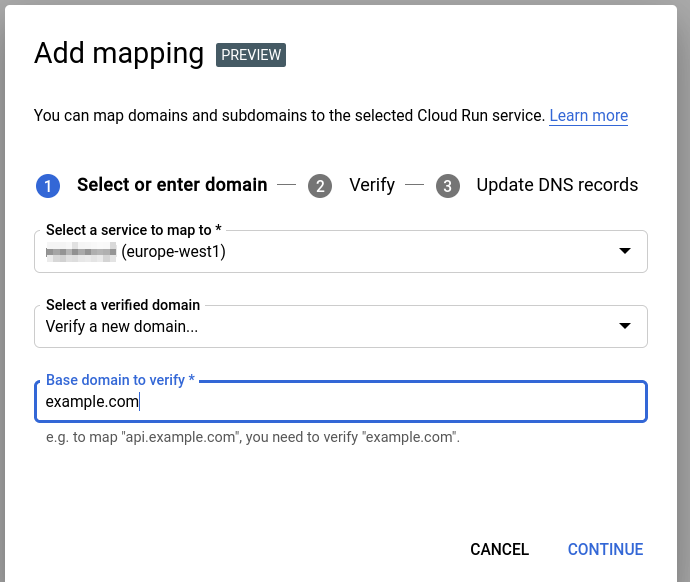

Custom domains

The automatically generated URL is not really a sight to see. You can assign custom domains to your services. You’ll just need to verify that you own the domain and assign some DNS records.

Let me know if you need anything via ask@revolgy.com.

Cheers, Ruda.

FAQs

Q1: What type of application is a perfect fit for Google Cloud Run?

Cloud Run is ideal for applications that are self-contained, stateless, containerized, have a low start time, and are not used 24 hours a day. Typical examples include REST API backends, internal tools and websites, and quick demos.

Q2: What are some examples of applications that should not be run on Cloud Run?

You should not use Cloud Run for stateful applications like SQL databases, applications that require cache warmups (unless they are asynchronous), or applications that need to run multiple processes.

Q3: When would it be more advantageous to use a managed instance group (MIG) of VMs instead of Cloud Run?

Using an autoscaling MIG might be worth it if your application serves multi-millions of requests per day from around the world. Unlike Cloud Run which can scale to zero, a MIG setup typically has at least one VM running constantly to serve requests without the cold-start delay of a new VM. VMs are also directly within a VPC network, which avoids the cost of a Serverless VPC Connector for internal networking.

Q4: How does the App Engine Flexible Environment differ from Cloud Run?

While both can run custom containers, the App Engine Flexible Environment requires you to supply the application separately from the runtime. This allows for a stable runtime container that is completely separate from the application’s code, preventing application developers from being able to change the container file.

Q5: What is the recommended way to manage Cloud Run infrastructure as code?

The most common tool for managing cloud infrastructure as code (IaC) is Terraform. You can define your Cloud Run service and its related IAM policies using HCL (HashiCorp Configuration Language) to create a repeatable and version-controlled deployment process.

Q6: How can you automate the process of building a container and deploying it to Cloud Run after a git push?

You can use Google’s Cloud Build service. By placing a cloudbuild.yaml file in your git repository, you can define steps to build the container image, push it to the Google Container Registry (GCR), and then deploy it as a new revision to your Cloud Run service. This entire process can be triggered to run automatically on a push to the repository.

Q7: What is a key pitfall to avoid when updating a Cloud Run service using Terraform?

When updating the container image URL in your Terraform variables, you must use the full URL including the SHA256 digest (e.g., gcr.io/project/app@sha256:...). If you only use the image tag, Terraform will not detect a change, and a new revision of your service with your latest application changes will not be deployed.

Q8: How must an application be configured to correctly receive requests inside a Cloud Run container?

The application should be coded to listen on the port number that is provided by the PORT environment variable. Cloud Run sends requests to this specific port, so the application should not be hard-coded to listen on a fixed port number.