Managed Services

EKS quickstart

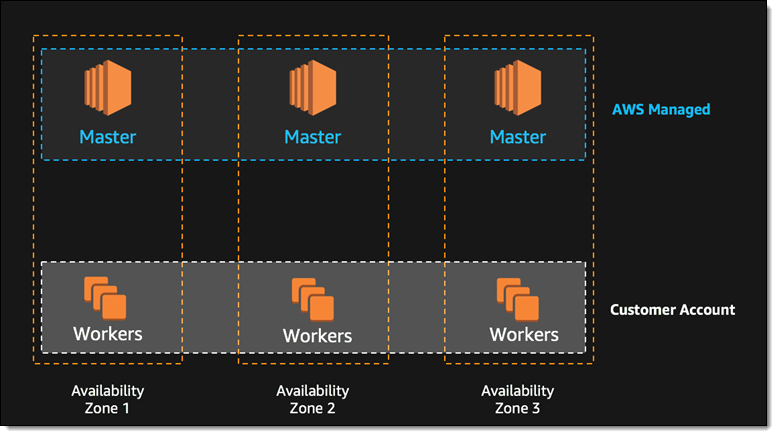

Amazon Elastic Container Service for Kubernetes (Amazon EKS) makes it easy to deploy, manage, and scale containerised applications using Kubernetes on AWS. Amazon EKS runs the Kubernetes management infrastructure for you across multiple AWS availability zones to eliminate a single point of failure. Amazon EKS is certified Kubernetes conformant, so you can use existing tooling and plugins from partners and the Kubernetes community. Applications running on any standard Kubernetes environment are fully compatible and can be easily migrated to Amazon EKS. Amazon EKS has been generally available to all AWS customers since June 2018.

Companies like Verizon, GoDaddy, Snapchat and SkyScanner are adopting Amazon EKS.

Each EKS cluster has three master nodes, which are managed by AWS.

Provisioning

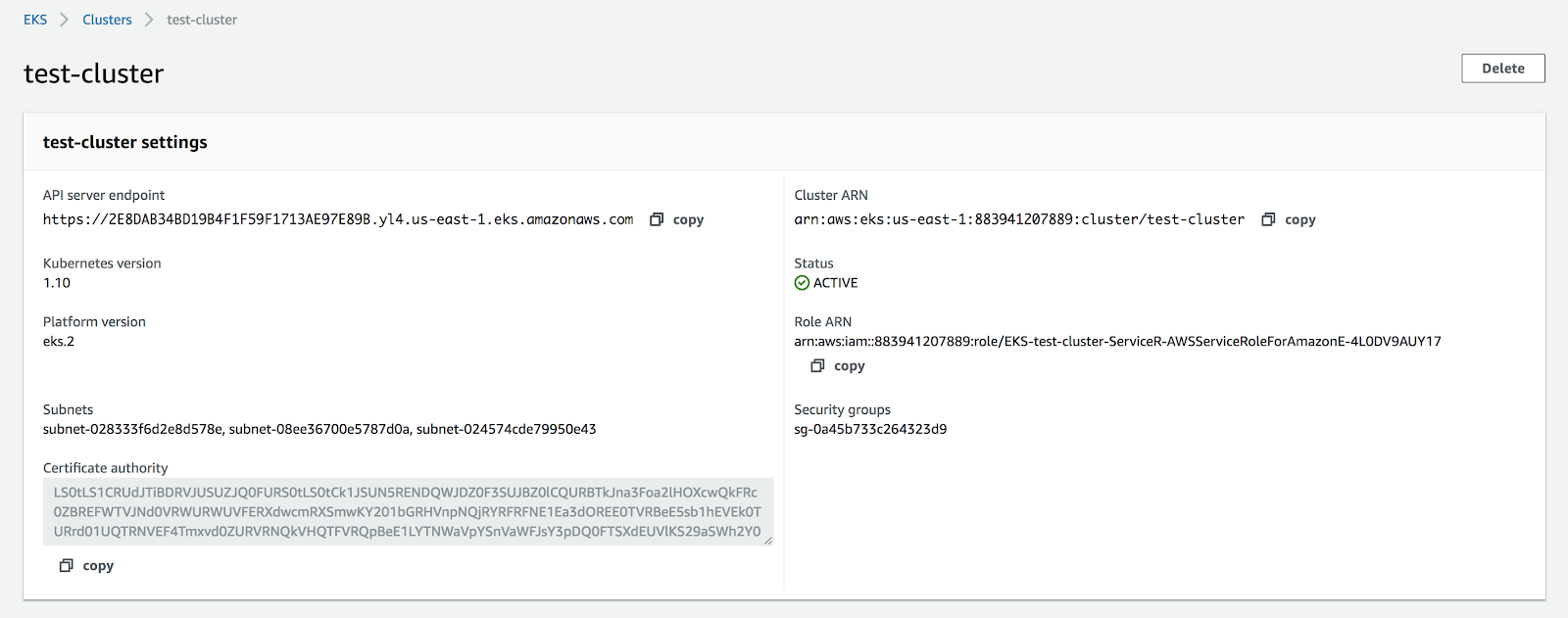

It is possible to provision an EKS cluster with Terraform, but I’d recommend eksctl: at the moment it seems to be the easiest way to provision a test cluster and fetch the auth credentials for kubectl.

$ eksctl create cluster --name=test-cluster --nodes-min=2 --nodes-max=5 --node-type=m5.large --region=us-east-1 --kubeconfig /Users/you/.kube/config.eks

Hit enter and go put the kettle on. It will take about 10 minutes to fully provision the cluster so there’s time for tea.

Make sure your kubectl is version 1.10 or newer, then verify that it can query the Kubernetes API.

$ export KUBECONFIG=/Users/you/.kube/config.eks

$ kubectl get pods

No resources found.
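The version requirement is easy to get wrong with a plain string comparison, because "1.9" sorts after "1.10" alphabetically. Here is a minimal sketch of a numeric check. The helper names `major_minor` and `version_at_least` are my own, not part of kubectl, and only the major and minor parts are compared.

```shell
# Print "MAJOR MINOR" for a version such as v1.10.3 (a missing minor counts as 0).
major_minor() {
  local v="${1#v}" major minor
  major="${v%%.*}"
  case "$v" in
    *.*) minor="${v#*.}"; minor="${minor%%.*}" ;;
    *)   minor=0 ;;
  esac
  # Drop any non-numeric suffix, such as the "+" in "10+".
  minor="${minor%%[!0-9]*}"
  printf '%s %s\n' "$major" "${minor:-0}"
}

# Succeed when version $1 is at least version $2, comparing numerically.
version_at_least() {
  local have want
  have="$(major_minor "$1")"
  want="$(major_minor "$2")"
  local have_major="${have% *}" have_minor="${have#* }"
  local want_major="${want% *}" want_minor="${want#* }"
  if [ "$have_major" -ne "$want_major" ]; then
    [ "$have_major" -gt "$want_major" ]
    return
  fi
  [ "$have_minor" -ge "$want_minor" ]
}
```

You would pass in whatever client version your kubectl reports, for example `version_at_least v1.11.2 1.10 && echo "kubectl is new enough"`.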

Install helm on the cluster

Helm is a Kubernetes package manager and a great way to manage Kubernetes releases. The next step is to install Tiller (the server part of Helm) on the cluster.

$ cat rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: tiller
  namespace: kube-system
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: tiller-role-binding
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io
subjects:
- kind: ServiceAccount
  name: tiller
  namespace: kube-system

$ kubectl create -f rbac.yaml

serviceaccount/tiller created

clusterrolebinding.rbac.authorization.k8s.io/tiller-role-binding created

$ helm init --upgrade --service-account tiller

Install wordpress with helm

We will use the latest stable Helm chart for WordPress: https://github.com/helm/charts/tree/master/stable/wordpress

First, create a StorageClass so that we can dynamically provision EBS-backed persistent volumes for MariaDB and WordPress.

$ cat storage-class.yaml

kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: gp2
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: kubernetes.io/aws-ebs
parameters:
  type: gp2
reclaimPolicy: Retain
mountOptions:
- debug

$ kubectl create -f storage-class.yaml

storageclass.storage.k8s.io/gp2 created
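To see what dynamic provisioning looks like from the consumer side, here is a rough sketch of a PersistentVolumeClaim that asks the gp2 StorageClass for a volume. The `render_pvc` helper, the claim name and the size are hypothetical and only for illustration; the WordPress chart creates its own claims, so this is not needed for the install.

```shell
# Print a PersistentVolumeClaim manifest that requests a volume from the
# gp2 StorageClass. The function name and its arguments are illustrative.
render_pvc() {
  local name="$1" size="${2:-1Gi}"
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${name}
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: gp2
  resources:
    requests:
      storage: ${size}
EOF
}
```

The manifest goes to stdout, so it can be inspected first and then piped to the cluster, for example `render_pvc demo-claim 2Gi | kubectl create -f -`.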

Then install the release. For some reason the MariaDB PVC is not picking up the default gp2 StorageClass, so we will override it manually. I am assuming it is a bug in the Helm chart; I’ll take a look at it later.

$ helm install --name wordpress stable/wordpress --set mariadb.master.persistence.storageClass=gp2

Wait a bit for the ELB to get provisioned and open the following url in the browser.

$ open http://$(kubectl get svc wordpress-wordpress -o jsonpath='{.status.loadBalancer.ingress[*].hostname}')
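If you would rather not guess when the ELB is ready, the wait can be scripted. This is a minimal sketch that polls the same jsonpath query until a hostname appears. The function name `wait_for_lb_hostname` and its default of 30 attempts, 10 seconds apart, are my own assumptions.

```shell
# Poll a Service until its load balancer reports a hostname, then print it.
# Arguments: service name, max attempts (default 30), delay in seconds (default 10).
wait_for_lb_hostname() {
  local svc="$1" attempts="${2:-30}" delay="${3:-10}" host i=0
  while [ "$i" -lt "$attempts" ]; do
    # An empty result means the ELB has not been assigned yet.
    host="$(kubectl get svc "$svc" -o jsonpath='{.status.loadBalancer.ingress[*].hostname}' 2>/dev/null || true)"
    if [ -n "$host" ]; then
      printf '%s\n' "$host"
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "timed out waiting for a hostname on service $svc" >&2
  return 1
}
```

For example: `open "http://$(wait_for_lb_hostname wordpress-wordpress)"`. Note that a hostname being assigned does not guarantee DNS has propagated, so the first request may still fail.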

Install guestbook

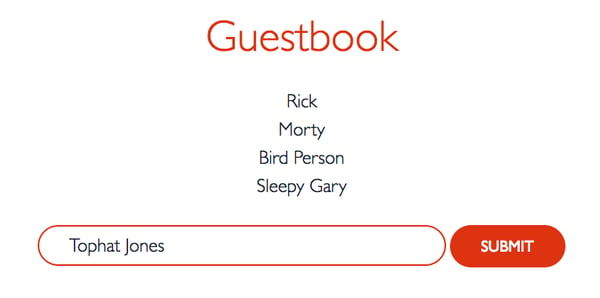

We will install a Kubernetes demo application called guestbook. Guestbook is a simple application written in PHP that uses a Redis cluster to store guest messages.

$ curl https://raw.githubusercontent.com/netmail/eksctl-demo/master/install-guestbook.sh | bash

Wait again for the ELB to get provisioned and open the following url:

$ open http://$(kubectl get svc guestbook -o jsonpath='{.status.loadBalancer.ingress[*].hostname}'):3000

Notice that the colour changes every time you refresh the page.

Teardown

Now you can easily delete the cluster with eksctl:

$ eksctl delete cluster --name=test-cluster --region=us-east-1

Summary

EKS seems to be quite behind AKS and GKE. The future spec of the service is still a bit uncertain, so comparing EKS to AKS or GKE might not even be relevant. GKE and AKS are free (you don’t pay for the master nodes as of September 2018), whereas EKS is charged at $0.20 per hour per cluster.
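To put the hourly price in perspective, here is a small sketch that turns it into a total for a given number of hours. It only uses the $0.20 per hour figure quoted above and covers the control plane alone, not the worker nodes, EBS volumes or load balancers. The function name is made up, and the arithmetic is done in whole cents to avoid floating point in the shell.

```shell
# Control plane cost in dollars for a number of hours at $0.20 per hour.
eks_control_plane_cost() {
  local hours="$1" cents_per_hour=20 total_cents
  total_cents=$((hours * cents_per_hour))
  printf '%d.%02d\n' "$((total_cents / 100))" "$((total_cents % 100))"
}

eks_control_plane_cost 24    # one day
eks_control_plane_cost 720   # a 30-day month
```

A 30-day month is 720 hours, so a single cluster left running costs 720 × $0.20 = $144 before any worker nodes are counted.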

So far, EKS is available only in Oregon and North Virginia. EC2 is the only mode offered; we’re still waiting for Fargate to become GA on EKS. EC2 autoscaling is not the most convenient, as it scales in and out on CPU usage but will not scale up when, for example, a pod is pending because no node can satisfy its resource requests. On GKE, a Cluster Autoscaler handles the autoscaling of the underlying “IaaS”; on EKS, you have to deploy and manage this yourself.
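Since CPU-based EC2 autoscaling will not react to unschedulable pods, it can help to keep an eye on them yourself. Below is a rough sketch that counts pods in the Pending phase across all namespaces. The function name is my own, and Pending also covers pods that are still pulling images, so treat the number as a signal rather than proof that the cluster is out of capacity.

```shell
# Count pods currently in the Pending phase across all namespaces.
count_pending_pods() {
  # grep -c exits non-zero when it counts no lines, so the status is
  # forced to success; the printed count is still 0 in that case.
  kubectl get pods --all-namespaces \
    --field-selector=status.phase=Pending --no-headers 2>/dev/null \
    | grep -c . || true
}
```

For example, `[ "$(count_pending_pods)" -gt 0 ] && echo "pods are waiting for capacity"` could be run from a cron job or a CI check while testing.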

Working with eksctl is quite smooth, easier than using heptio-authenticator. Definitely check out the tool.

Sources:

https://github.com/netmail/eksctl-demo

FAQs

Q1: What is Amazon EKS (Elastic Container Service for Kubernetes)?

Amazon EKS is a service that makes it easy to deploy, manage, and scale containerized applications using Kubernetes on AWS. It runs the Kubernetes management infrastructure for you across multiple AWS availability zones to eliminate a single point of failure and is certified Kubernetes conformant.

Q2: What is the recommended tool for provisioning a test cluster, and why?

The recommended tool is eksctl. It is considered the easiest way to provision a testing cluster and fetch the authentication credentials needed for kubectl to connect to it.

Q3: What tool is used in the guide to deploy applications like WordPress onto the EKS cluster?

Helm, a Kubernetes package manager, is used to manage and deploy application releases onto the cluster. After installing Helm’s server component (Tiller), you can install applications like WordPress using a Helm chart.

Q4: How is persistent storage for applications handled in the provided example?

To handle persistent storage, a Kubernetes StorageClass is created first. This allows for the dynamic provisioning of a Persistent Volume using AWS Elastic Block Store (EBS) for applications like the MariaDB database and WordPress.

Q5: How do you delete the cluster after testing?

The cluster created with eksctl can be easily deleted using the command eksctl delete cluster [cluster-name].

Q6: At the time the article was written (c. September 2018), how did EKS compare to GKE and AKS?

At that time, EKS was considered to be behind GKE and AKS. Key differences included:

Cost: EKS was charged at $0.20 per hour per cluster, whereas GKE and AKS master nodes were free.

Availability: EKS was only available in the Oregon and North Virginia AWS regions.

Features: Fargate support on EKS was not yet generally available.

Q7: How did EKS cluster autoscaling work compared to GKE’s at that time?

At that time, GKE had a built-in Cluster Autoscaler to handle the underlying infrastructure scaling. On EKS, you had to deploy and manage this capability yourself. The default EC2 autoscaling would not scale up if a pod was pending due to resource requests it couldn’t meet.